Hi Ryan,

With regard to a written specification for the components, I guess it depends on the level of detail required. We have some material from a design perspective, but it is pretty basic documentation (e.g. how the components can be used and how they appear onscreen). From a technical perspective, I think that’s one for Daryl to answer (though I’m sure he’d appreciate any help). I’d be happy to share what we’ve got with you.

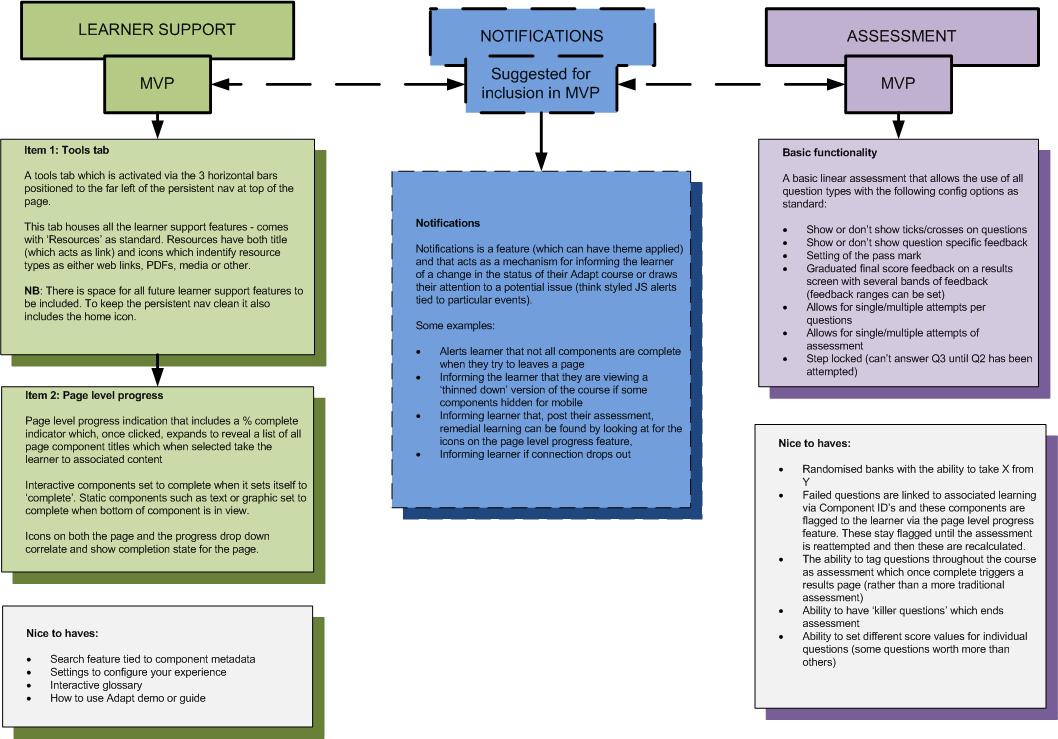

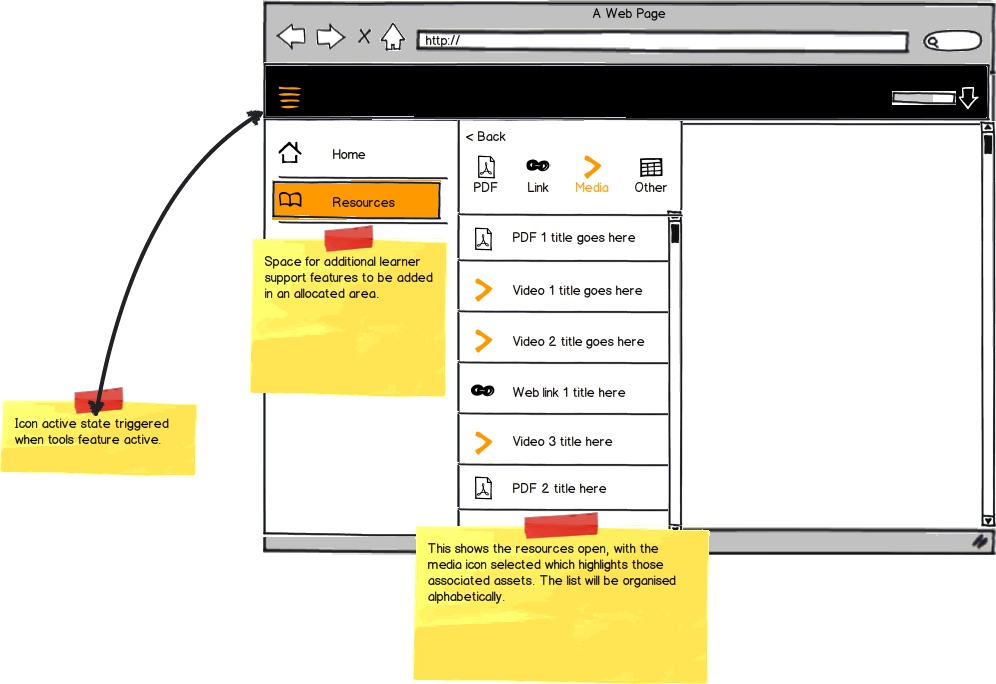

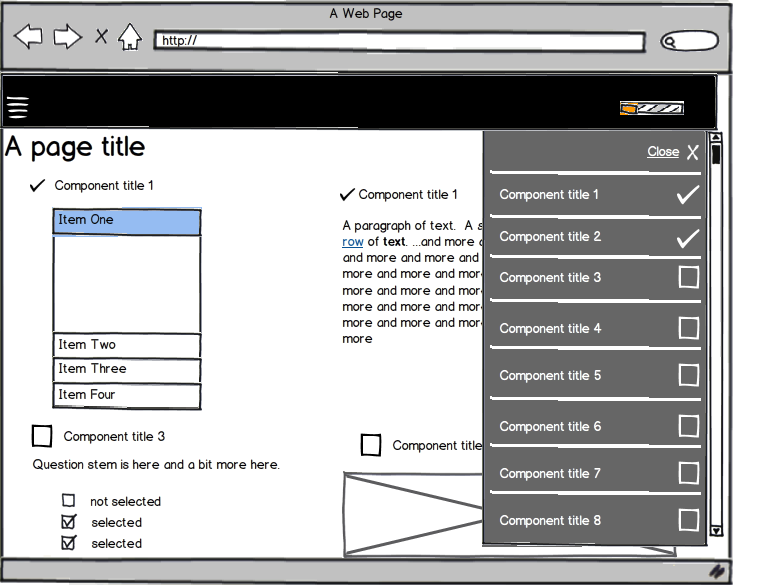

I think there are elements that we could push back to later phases for sure, particularly around learner support features. I’m keen to include triggered components, as they make for far more varied treatments. However, it’s all negotiable. From my perspective, as long as the authoring environment can be easily extended to handle the different layouts and learner support features (such as glossary/resources), it becomes less of a concern.

A basic assessment that allows the use of a variety of question types is a must-have. It will also require some configuration options, such as:

- show or don’t show ticks/crosses on questions

- show or don’t show question specific feedback

- setting of the pass mark

- graduated final score feedback on the results screen, with a configurable number of feedback bands and their ranges, e.g.:

  - 1: Fail: 0-60%

  - 2: Pass: 61-90%

  - 3: Merit: 91% and above

- allows for single/multiple attempts

- possibly step locked

- and ideally randomised banks too.
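
To make the options above a little more concrete, here is a rough Python sketch of how they might be expressed as a single configuration object. All the names (`AssessmentConfig`, `FeedbackBand`, `band_for` and so on) are hypothetical and purely illustrative; nothing here reflects how the code base actually models assessments, and the default pass mark of 61% is just an assumption chosen to line up with the example bands.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class FeedbackBand:
    """One band of final-score feedback (hypothetical structure)."""
    label: str
    min_percent: int  # inclusive lower bound; the band runs up to the next band's bound


def default_bands() -> List[FeedbackBand]:
    # Mirrors the example ranges: Fail 0-60%, Pass 61-90%, Merit 91% and above.
    return [
        FeedbackBand("Fail", 0),
        FeedbackBand("Pass", 61),
        FeedbackBand("Merit", 91),
    ]


@dataclass
class AssessmentConfig:
    """Illustrative bundle of the assessment options listed above."""
    show_marking: bool = True             # show or hide ticks/crosses
    show_question_feedback: bool = True   # show or hide question-specific feedback
    pass_mark: int = 61                   # assumed value, in percent
    max_attempts: Optional[int] = 1       # None means unlimited attempts
    step_locked: bool = False
    randomised_bank: bool = False
    bands: List[FeedbackBand] = field(default_factory=default_bands)

    def band_for(self, score_percent: float) -> FeedbackBand:
        """Return the highest band whose lower bound the score reaches."""
        if not 0 <= score_percent <= 100:
            raise ValueError("score_percent must be between 0 and 100")
        eligible = [b for b in self.bands if score_percent >= b.min_percent]
        if not eligible:
            raise ValueError("no band covers this score; add a band starting at 0")
        return max(eligible, key=lambda b: b.min_percent)

    def has_passed(self, score_percent: float) -> bool:
        return score_percent >= self.pass_mark

    def can_attempt(self, attempts_used: int) -> bool:
        return self.max_attempts is None or attempts_used < self.max_attempts


if __name__ == "__main__":
    config = AssessmentConfig(max_attempts=3, randomised_bank=True)
    print(config.band_for(75).label, config.has_passed(75), config.can_attempt(2))
```

Storing only the lower bound of each band avoids gaps or overlaps between ranges, which seems easier for an author to get right than entering both ends of every band.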

I’ll put together an assessment wish list which elaborates on the above and puts the items in priority order. In terms of how we’ve done assessments previously, we’d set a given article to be an assessment. This seemed to be the most flexible and pragmatic approach given the old code base; I don’t know whether it still holds true now that work has begun on the refactoring.

I’m no expert in authoring tools, but when reviewing the wireframes for Adapt’s authoring tool I’ll keep an eye out for how we might handle these types of features and volunteer some suggestions.

Thanks,

Paul